Composition

As has already been hinted at, the power of the command line system is not so much how amazing (or not) the individual commands are, but how well those commands can be easily combined together to give new desired behavior. The reason for this is, in any graph, the number of edges can grow much faster than the number of nodes.

That is, consider a system with just 10 programs. If these programs can only be used independently of each other, as is often the case with GUI programs, then we have 10 programs that we can run. If each of these programs can be combined with one of the others though, then we also have 10 x 9 = 90 compositions we can run. Further, when we get another program, if it only stands on its own, the we have increase our options by 1 (from 10 to 11). If it can be combined with the others though, the our combinations increases by 20 (from 10 x 9 = 90 to 11 x 10 = 110).

In order for programs to compose, they need to talk a common language. In Linux, with its Unix heritage, this is text. As Doug McIlrory, who invented Unix pipes said,

This is the Unix philosophy: Write programs that do one thing and do it well. Write programs to work together. Write programs to handle text streams, because that is a universal interface

The other universality you will find in Linux is a huge amount of information about the operating system itself is exposed as text files. This allows all programs to consume and manipulate this information. To quote the Unix Architecture page on Wikipedia

With few exceptions, devices and some types of communications between processes are managed and visible as files or pseudo-files within the file system hierarchy. This is known as everything’s a file.

As a simple example of this lets look at the /proc/cpuinfo and /proc/meminfo files using the cat command

which prints all the files it is given to the screen one after another (concatenates them together)

[tyson@gra-login3 ~] cat /proc/cpuinfo

processor : 0

vendor_id : GenuineIntel

cpu family : 6

model : 79

model name : Intel(R) Xeon(R) CPU E5-2667 v4 @ 3.20GHz

...

[tyson@gra-login3 ~] cat /proc/meminfo

MemTotal: 131624960 kB

MemFree: 27484820 kB

MemAvailable: 53464260 kB

SwapCached: 42912 kB

...

Having all this information available to us as simple text files gives us a lot of power in our shell. Things that

would be only available by writing a program to make a special operating system (OS) call in another OS, can be

retrieved and manipulated with our basic file commands like cat in our shell.

Viewing and editing

One of the challenges in preparing a course is to come up with interesting examples. Several years ago, my wife’s cousin took her flying in a glider. She really enjoyed the experience, and a couple of years ago we joined the London Soaring Club to learn how to fly gliders too. This has proven to be a lot of fun. To give you a better visual, here is a random picture from the internet of a trainee in a two-seater trainer (the instructor sit in the seat behind you).

The gliders all have GPS trackers in them, and the club has a variety of awards that are awarded each year based on the traces (e.g., who gained the most altitude, who flew the furthest, etc.). Going through these files by hand is a tedious chore, but it is easy to automate with the command line, and is typical of the sort of data and log file real-world pre- and post-processing we frequently have to do as researchers.

The flights.zip file we have downloaded and unpacked contains a variety of glider flight traces and we are going

to use them for our exercises now. We have already introduced the cat command to display the entire contents of a

file (or multiple files). Often we are only interested in the start or end of a file though. The head and tail

commands allow us to extract just the start of end of a file. Both show ten lines by default, but can be told to

show an arbitrary number with the -n <number> option. Using this to look at one of the igc files

[tyson@gra-login3 ~]$ head flights/0144f5b1.igc

AXCSAAA

HFDTE030816

HFFXA050

HFPLTPILOTINCHARGE:Lena

HFGTYGLIDERTYPE:L23 Super Blanik

...

[tyson@gra-login3 ~]$ tail flights/0144f5b1.igc

B2116474309137N08057022WA002870042000309

B2116524309138N08057024WA002870041800309

B2116574309138N08057024WA002870041800309

B2117024309138N08057024WA002870041800309

B2117074309138N08057024WA002870041800309

...

We see the files are composed of a series of records. Googling will give the full igc file specification, but, for our purposes, the records of interest are the date record, the pilot record, the plane record, and GPS position record

HFDTE<DD><MM><YY> (date: day, month, year)

HFPLTPILOTINCHARGE:<NAME> (pilot: name)

HFGIDGLIDERID:<CALLSIGN> (plane: call sign)

B<HH><MM><SS> (time: hour, minute, second,

<DD><MM><mmm>N (latitude: degrees, minute, decimal minutes)

<DDD><MM><mmm>W (longitude: degrees, minutes, decimal minutes)

A<PPPPP><GGGGG> (altitude: pressure, gps)

<AAA><SS> (gps: accuracy, satellites)

Linux also comes with a variety of text editors. The two most common ones are emacs and vi, both of which were

created in 1976, have an almost cult-like following, and will seem quite strange to the uninitiated. The quick

reference guide contains the basic key strokes/commands to use these editors. For a new user, the most important

thing to know is how to exit these editors if you accidentally get into them

emacs- pressCTRL+x CTRL+cvi- type:q!

where CTRL+<key> means to press CTRL+c

written is ^c and C-c.

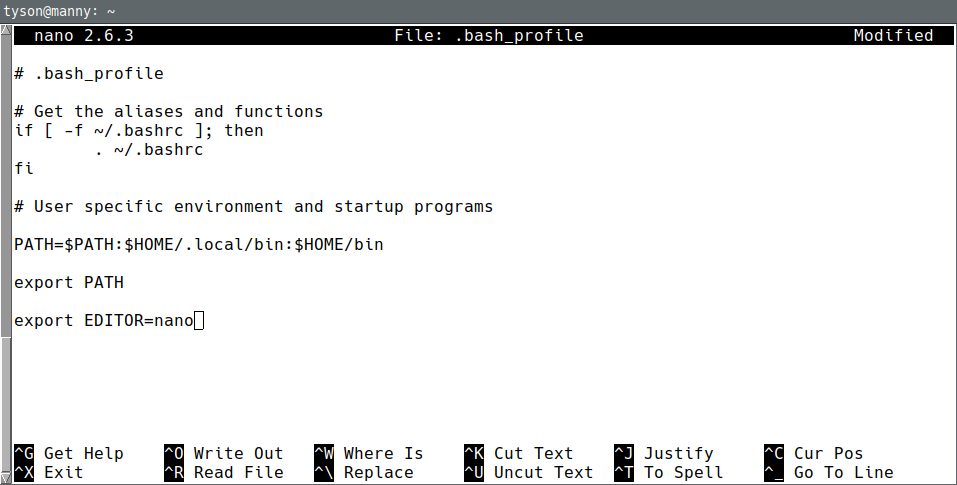

For a first time user, nano is likely a good choice for making simple edits. It is a basic no-frills editor that

lets you move around with the cursor keys and make edits as most people expect. The key sequences to exit, save

(write out) the file, and so on are printed at the bottom of the screen using the ^<key> format (e.g., press

Sometimes, when you run a command, it will start an editor for you to edit something. Chances are this editor will

be vi and you will need to know the :q! sequence to get out. You can set a person default editor by setting

EDITOR environment variable to the editor you want

export EDITOR=nano

There are actually many such settings that programs inherit from your command line session, and even more that are just specific to bash and not inherited by other programs. They are documented in the various commands manual pages. The commands in ~/.bash_profile (~ mean /home/tyson) are always run at each login, so it is a good place put such setting that you always want set.

We will do this now to demonstrate editing a file.

[tyson@gra-login3 ~]$ nano .bash_profile

After editing ~/.bash_profile (runs every login) or ~/.bashrc (runs every time bash runs), always test you can

still login with a new ssh session in a new terminal. These are startup files, and some errors can leave you

unable to login, which will be impossible to fix unless you still have an active sessions running to fix things.

Exercises

An example of something we may want to do with these files is determine which pilot each file belongs to in order to give each member a copy of their file. In this exercise we are just going to use our new commands to look at the files a do a few operations by hand to get an idea of the sort of things we will be automating.

Using

headto look at a few (say 3-5) of the igc files (refer to the igc file specification given earlier) and answer the following questionsa. who the pilot is, and

b. what the year was.

For each of these files first few files, use the

mkdirandcpcommands toa. make a directory for that pilot (if required),

b. make a directory in the pilot’s directory for that year (if required), and

c. copy the file into the pilot/year directory.

What happens if you run

cat,head, andtailwithout any filename argument? In trying this, be aware thatCTRL+ccan be used to abort most command andCTRL+dsignals the end of keyboard input.

Regular expressions and globing

The grep (global regular expression search) command searches through files for regular expressions. Regular

expressions are a sequence of characters that define a search pattern. Variants of them are supported by a wide

range of applications, including google sheets, and are well worth learning. A very simple usage would be to use

grep to extract all the line that starts with HFPLT from an igc file

[tyson@gra-login3 ~]$ grep ^HFPLT flights/0144f5b1.igc

HFPLTPILOTINCHARGE:Lena

As can be seen in the above example, a regular expression is simply a sequence of regular characters that match

themselves plus some special characters like ^ that match things like the start of the line. The most basic

matches supported by almost all regular expressions are (these are covered in our quick reference guide and the

grep manual page too)

^- match start of line$- match end of linecharacter - match the indicated character

.- match any character[…]- match any character in the list or range (^inverts)(…)- group…

|… - match either or?- match previous item zero or one times*- match previous item zero or more times+- match previous item one or more times{…}- match previous item a range of times

Regular expression implementations frequently differ in what special characters have to be escaped (proceeded with

a \) to have the above special meaning or not. For example, grep supports both basic and extended regular

expressions where the former requires several of the special characters to be escaped to have their special meaning

and the latter does not.

A more complex example would

[tyson@gra-login3 ~]$ grep '^B.*A.......5..' flights/0144f5b1.igc

B1837024309903N08053926WA009230050102706

B1837074309873N08053817WA009200051002706

...

which gives all GPS trace lines with a GPS altitude recording of 500-599 meters.

In this example we have enclosed the regular expression in single quotes. This is because bash also has sequences

that it treats special, and this includes * which, without the single quotes, indicates a glob pattern for

pathname expansion. Glob patterns allow us to specify pathnames with the following wildcards

*- match any number of characters (include none)?- match any single character[…]- match any character in this list

When bash sees unquoted versions of these in a command line, it replaces the pattern with all pathnames that

match the pattern. This allows us to easily run commands like extract the pilot line from all the igc files

[tyson@gra-login3 ~]$ grep ^HFPLT flights/*.igc

0144f5b1.igc:HFPLTPILOTINCHARGE:Lena

04616075.igc:HFPLTPILOTINCHARGE:Bill

...

It is important to realize is that it is bash that literally replaces the pattern with all the matching

pathnames. The command that is being run never sees the patterns. It just gets the list of files, exactly as if we had

typed in all the filenames ourselves.

There are actually many such expansions that can occur, and sometimes it is useful to know what the command really

being run is. The set -x command tells bash to print out each command it runs, and set +x tells bash to stop

printing them out. We can use this to see exactly how our pattern gets expanded into the command that is run

[tyson@gra-login3 ~]$ set -x

[tyson@gra-login3 ~]$ grep ^HFPLT flights/*.igc

+ grep --color=auto '^HFPLT' flights/0144f5b1.igc flights/04616075.igc ...

0144f5b1.igc:HFPLTPILOTINCHARGE:Lena

04616075.igc:HFPLTPILOTINCHARGE:Bill

...

[tyson@gra-login3 ~]$ set +x

This also shows something I hadn’t intended to talk about. The grep command is automatically being provided an

--color=auto option. This is done with the bash aliases feature which can provide default arguments and short

forms for various commands. It is also commonly used to make -i (verify) a default for many commands like

rm. You can view the current set of alias with the alias command and read more about them in the bash manual

page.

Redirection and pipes

Now that we have a simple way of extracting all the pilot and date fields from all the igc files, we need to use a

redirection to save it in a file so we can process it further. Redirections are specified at the end of the command

line with the < and > characters. A simple mnemonic is that they are like arrows re-directing the input and

output, receptively from and to a file. An example redirection to save out list of pilots to pilots would be

[tyson@gra-login3 ~]$ grep ^HFPLT flights/*.igc > pilots-extracted

Running this command produces no output as all the output has been redirected from the screen to the pilots file,

as we can easily verify by loading pilots in our editor or printing it out using cat

[tyson@gra-login3 ~]$ cat pilots-extracted

flights/0144f5b1.igc:HFPLTPILOTINCHARGE:Lena

flights/04616075.igc:HFPLTPILOTINCHARGE:Bill

flights/054b9ff8.igc:HFPLTPILOTINCHARGE:Lena

...

We only want the pilot names though, not the filename and the HFPLTPILOTINCHARGE: field identifier. To remove

these we can use the cut command which lets us cut out bits from a line. The pilot names always start at the 41st

character in this output, so one way to do this would be to specify -c 41-, which means give me from character 41

to the end of the line. A simpler way though is to note the line is broken in parts with the : character. With

this observation, we can use -d : to break it up into three pieces as -f 3 to select the third

[tyson@gra-login3 ~]$ cut -d : -f 3 pilots-extracted

Lena

Bill

Lena

...

[tyson@gra-login3 ~]$ cut -d : -f 3 pilots-extracted > pilots-names

The only issue we have now is we have each pilot listed for each flight they took. We would like to have each pilot

listed only once. As it happens, there is uniq command that removes duplicate lines. On closer reading of the

manual page though, it becomes apparent that uniq only removes duplicate lines if they are adjacent, and states

that you have to run your file through the sort command first. Doing this we get

[tyson@gra-login3 ~]$ sort pilots-names > pilots-sorted

[tyson@gra-login3 ~]$ uniq pilots-sorted

Aasia

Bill

Fred

...

Creating a bunch of intermediate files simply to feed the output from one command into the input of the next

command is tedious though, so bash provides a | (pipe) syntax to do this for us. With this syntax we can

eliminate all the temporary files in

[tyson@gra-login3 ~]$ grep ^HFPLT flights/*.igc > pilots-extracted

[tyson@gra-login3 ~]$ cut -d : -f 3 pilots-extracted > pilots-names

[tyson@gra-login3 ~]$ sort pilots-names > pilots-sorted

[tyson@gra-login3 ~]$ uniq pilots-sorted

and collapse it down to just

[tyson@gra-login3 ~]$ grep ^HFPLT flights/*.igc | cut -d : -f 3 | sort | uniq

Aasia

Bill

Fred

...

As a small point of clarification, this pipe command is not creating temporary files and providing them to the commands. Rather it redirecting the (screen) output of each program into the (keyboard) input of the next one. This works as all the above commands (and most others) get their input from the keyboard if a filename is not specified (this came up in an earlier exercise). The above is then technically equivalent to

[tyson@gra-login3 ~]$ grep ^HFPLT flights/*.igc > pilots-extracted

[tyson@gra-login3 ~]$ cut -d : -f 3 < pilots-extracted > pilots-names

[tyson@gra-login3 ~]$ sort < pilots-names > pilots-sorted

[tyson@gra-login3 ~]$ uniq < pilots-sorted

Exercises

In these exercises we are going to create a series of pipelines for extracting key bits of information from our igc files. In the next section we will be converting these pipeline commands into shell scripts.

The key to to building a successful pipeline is to build it up slowly and test each addition. Instead of typing in an entire pipeline, running it, and then not knowing where it went wrong, test each stage and get it correct before adding the next. This is very easy to do as the up arrow brings up the previous commands run for further editing and re-running. For example

[tyson@gra-login3 ~]$ grep ^HFPLT flights/*.igc | head

[tyson@gra-login3 ~]$ grep ^HFPLT flights/*.igc | cut -d : -f 3 | head

[tyson@gra-login3 ~]$ grep ^HFPLT flights/*.igc | cut -d : -f 3 | sort | head

[tyson@gra-login3 ~]$ grep ^HFPLT flights/*.igc | cut -d : -f 3 | sort | uniq

First an output redirection question though.

What is the difference between redirecting output with

>and>>(hint, try running the same redirected command twice and see what happens to the output file in each case).Create pipelines to extract

a. the flight date from a single igc file,

b. all flight years from all igc files,

c. all GPS time records from a single igc file, and

d. all GPS altitude records from a single igc file.

Extend the GPS time record extraction pipeline

a. to give the starting (first) time, and

b. to give the finishing (last) time.

Extend the GPS altitude record extraction pipeline to give the highest altitude.